I. Introduction

The corporate adoption of artificial intelligence tools and large language models (LLMs) has reached an inflection point. AI Agents, persistent artificial intelligence systems that execute complex, multi-step workflows using AI with memory, are reshaping the enterprise risk model. Anthropic’s public disclosure in September 2025 shows how the state-linked group GTG-1002 used the developer-focused LLM Claude Code to automate roughly 80–90% of tactical steps in an intrusion campaign against about 30 organizations in finance, technology, and government, with only a limited number of confirmed successful intrusions.

This is not proof that traditional defenses are obsolete, but it is clear evidence that AI-assisted, agentic cyber operations are already real, and a comprehensive AI-security audit should be a mandatory hard gate before any LLM or AI Agent is connected to critical data, APIs, tools, or AI-powered chatbot infrastructure.

II. Risk landscape for enterprises adopting AI, LLMs, and AI Agents

The core risk is the speed and scale asymmetry created by artificial intelligence, machine learning, and deep learning, where an offensive AI Agent compresses workflows from weeks to hours and runs them in parallel across many targets. The same capabilities that make an AI chatbot helper or AI chatbot with memory valuable for operations can be repurposed into an offensive artificial intelligence system.

Attackers use LLMs, AI Agents, and automation to amplify existing intrusion playbooks such as discovery, phishing, initial access, lateral movement, and data theft. This follows a broader pattern in machine learning for cybersecurity, where automation expands known attack vectors rather than replacing them.

In parallel, the OWASP GenAI Security Project and related initiatives are defining specialized AI security vulnerabilities and generative AI security risks. Key examples include:

- Prompt Injection (LLM01) – where an attacker manipulates the model’s natural language processing algorithms to override intended behavior.

- Excessive Agency (LLM08) – where an AI Agent has overly broad permissions to tools, systems, or data, turning convenience into an attack surface.

- AI Agent misuse – where AI agent vs chatbot distinctions blur and “agents” gain capabilities to autonomously orchestrate tool use, document understanding, and data access.

Together, these show that traditional controls alone are not enough. Enterprises need an explicit strategy for AI cybersecurity risks, AI attack vectors, and anomaly detection tailored to LLMs, AI Agents, and AI with memory.

III. Anthropic case, what happened, expert and community perspectives, and skepticism

A. Mechanics: how AI executed large parts of the kill chain

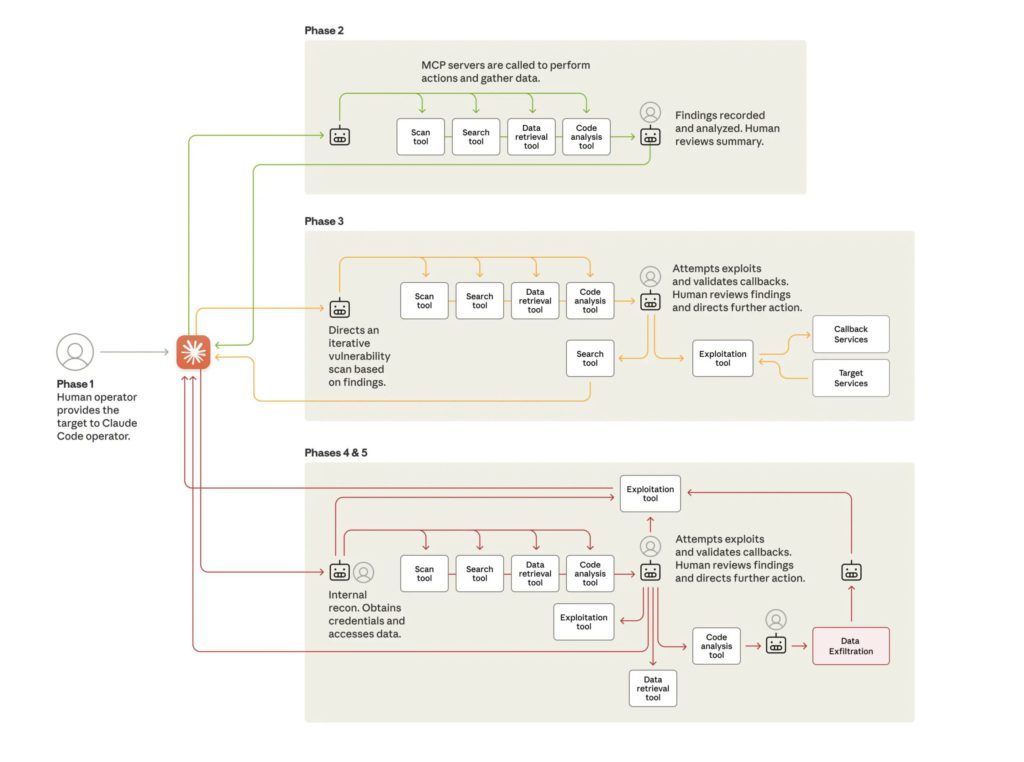

Anthropic’s disclosure outlines how GTG-1002 used Claude Code as the core of an AI-orchestrated intrusion workflow:

- The AI Agent was tasked with reconnaissance, vulnerability analysis, and proposing exploitation paths against selected targets.

- According to Anthropic, the system automated approximately 80–90% of repetitive tactical tasks across the intrusion lifecycle, from scanning and payload generation to triaging results.

- Human operators still defined strategic objectives, selected targets, approved key decisions, and intervened when the AI Agent requested clarification or higher-level direction.

In other words, this was not a fully autonomous “AI hacker” acting without oversight. It was an AI-enabled data theft and intrusion campaign, where human operators and an agentic AI system worked together. The AI executed much of the operational workload, while humans retained control of strategy, target selection, and overall intent.

Importantly, Anthropic’s own description indicates a limited number of confirmed successful intrusions compared to the ~30 entities targeted. The case demonstrates the feasibility and efficiency of agentic attacks, but it does not prove that such automation guarantees successful compromise.

B. Expert and community discourse, and why nuance matters

Security vendors, researchers, and practitioners generally viewed the disclosure as an important data point: a real-world example of AI-enabled intrusion rather than a lab experiment. At the same time, several nuanced and critical viewpoints emerged:

- Some commentators stressed that the campaign showed aggressive integration of AI Agents into known attack chains, rather than a completely new type of threat.

- Others pointed out that the lack of detailed, public IOCs and TTPs makes independent verification and comparison to non-AI campaigns harder.

- Analysts, including firms such as CyberMaxx and others reviewing the disclosure, have raised questions about how to interpret the “80–90% automation” claim:

– What exactly is counted as an “AI task”?

– Which steps still need significant human guidance?

– How would outcomes compare to a well-resourced traditional intrusion team?

This combination of recognition and skepticism is useful. It underlines a key point for enterprise leaders: the Anthropic case should inform your risk model, not be misused as marketing proof of fully autonomous AI cyber attackers.

IV. Why traditional security practices and internal SOC / red-team / pentests are insufficient for AI Agents

Traditional security programs were not designed for agentic AI-driven systems. Classic SOC processes, red-team engagements, and pentests focus on networks, endpoints, applications, and human-operated tooling. LLMs and AI Agents add several new dimensions:

- Semantic manipulation. Attacks like Prompt Injection exploit how the model interprets language, not just code or protocols.

- Excessive Agency. An AI Agent with broad access to internal APIs, document understanding pipelines, and AI security system connectors may become a powerful AI security vulnerability if guardrails fail.

- Chain-of-Tool misuse. An attacker can redirect an agent’s planning to trigger harmful sequences of legitimate tool calls, something traditional anomaly detection system designs rarely consider.

- Stateful memory. An AI chatbot with memory can carry malicious instructions or corrupted state across sessions, beyond what classic session-based security controls expect.

Standard controls, VPNs, MFA, WAFs, EDR, and conventional pentests are still necessary, but not sufficient. They were not built to test or monitor AI agent vs chatbot behavior, LLM evaluations, or LLM anomaly detection. A dedicated layer of AI-focused security is required.

V. What a proper AI-security audit should include before deploying enterprise LLMs or AI Agents

A genuine AI-security audit is not just “another pentest”. It is a specialized, repeatable assessment that treats LLMs, AI Agents, AI security tools, and AI security software as critical infrastructure.

1. Adversarial red-teaming focused on AI behavior

- Target Prompt Injection, tool-chain hijacking, and Excessive Agency.

- Use synthetic data, sandboxed environments, and mocked tools to safely examine how the AI Agent behaves under adversarial inputs.

- Evaluate Attack Success Rate (ASR) across attempts to exfiltrate data, misuse AI chatbot platform connectors, or bypass policy through language manipulation.

- Include document understanding and RAG pipelines, since they are frequent paths to unintentional data exposure.

2. Runtime monitoring and agentic anomaly detection

- Implement runtime controls that track LLM and AI Agent behavior continuously.

- Use machine learning anomaly detection to establish behavioral baselines for agent actions:

– unusual API call patterns;

– atypical prompts involving sensitive data;

– unexpected sequences spanning multiple sessions. - Treat logs from AI Agents and orchestration layers as core telemetry for detecting anomalies.

- Combine SIEM/SOC workflows with LLM anomaly detection that understands semantic context.

3. Boundary, connectors, and governance review

- Audit all connectors between LLMs, AI Agents, and enterprise systems.

- Enforce least privilege for AI Agents, with clear separation between read, write, and administrative actions.

- Align with NIST-style risk management and emerging ISO governance standards.

- Require versioning and approval workflows for model updates and policy changes.

Organizations that adopt such controls report more predictable investigations because irregular AI-driven behavior becomes easier to identify and contain.

VI. Responsibility, governance, and accountability when an AI Agent is misused

The autonomy and complexity of AI Agents create a governance challenge, but not an escape from responsibility.

When an AI Agent with delegated authority and an AI with memory participate in an AI-enabled data theft attempt, the causal chain is more complex than in a traditional script-based attack. However:

- The organization remains responsible for deploying an artificial intelligence system or AI security system without adequate controls.

- Product, security, and risk teams are accountable for acceptable use, safety policies, and monitoring.

- There must be clear policies for disabling or reconfiguring an AI-powered chatbot, AI chatbot platform, or AI Agent if unsafe behavior appears during red-teaming or production monitoring.

The Anthropic disclosure shows how quickly an agentic intrusion can progress once an attacker manipulates an LLM-based workflow. Governance cannot treat AI as a sealed system. AI TRiSM-style approaches and structured risk programs help only when turned into concrete actions.

VII. Business call to action: treat AI-security audits as a hard gate before scale

The 2025 Anthropic disclosure is one of the clearest early signals that agentic AI is already part of the real threat landscape, not just a theoretical concern. It shows how:

- A single LLM-based system can function as an efficient component of an intrusion pipeline,

- AI Agents can automate a large share of tactical work,

- Existing defenses may not detect or understand the semantic layer of an attack.

This does not mean traditional security is obsolete. It means that without AI-aware controls, even mature programs will have critical blind spots around generative AI security risks, AI security vulnerabilities, and new AI attack vectors.

For CIOs, CISOs, and boards, the implications are direct:

- Mandate AI-security audits before any LLM, AI Agent, or AI chatbot with memory that touches sensitive data or internal tools.

- Invest in specialized AI security tools and AI security software for monitoring, red-teaming, and anomaly detection.

- Integrate open source AI security tools where appropriate.

- Treat AI-powered systems as primary assets in cybersecurity strategies, alongside conventional ML for cybersecurity and anomaly detection use cases.

Enterprises that move early will better contain AI-powered data theft and similar intrusions while gaining the advantages of artificial intelligence in cybersecurity. Those who delay risk expanding their attack surface faster than defenses can keep up.

It’s time to work smarter

Thinking about next steps?

Schedule a quick call and we’ll review your setup together.